Introduction

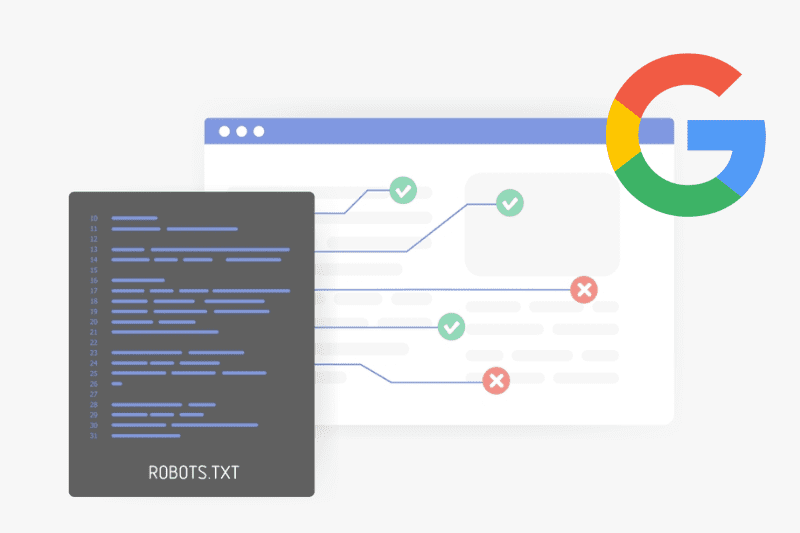

In website management and search engine optimization (SEO), the robots.txt file plays a crucial role. It is the channel through which website owners tell search engine crawlers which parts of a site they may crawl.

In this post, we look at the syntax of robots.txt, its role in website management, and how to implement it effectively to optimize your online presence.

What is Robots.txt?

The robots.txt file is a plain text file placed in the root directory of a website that provides instructions to search engine crawlers. It tells them which pages or directories they are allowed to crawl. Compliance is voluntary, but the major search engines honor it, so website owners can use it to manage how their content is crawled.
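Because the file always lives at the root of a host, its address can be derived from any page URL on that host. The following is a minimal Python sketch of that rule; the helper name `robots_url` and the example URLs are assumptions made for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/post?id=7"))
# https://www.example.com/robots.txt
print(robots_url("https://shop.example.com/cart"))
# https://shop.example.com/robots.txt
```

Note that each host, including each subdomain, gets its own robots.txt file.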

Syntax and Structure of Robots.txt

To understand the robots.txt file, let’s break down its syntax and structure. The file consists of lines containing user-agents and directives. User-agents specify which search engines or bots the directives apply to, and directives indicate actions to be taken.

User-agent:

- User-agent: * (Applies to all search engines)

- User-agent: Googlebot (Applies specifically to Google’s crawler)

Directives:

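- Disallow: This directive tells crawlers which directories or pages they should not crawl. It does not by itself keep a URL out of search results: a blocked page can still be indexed if other sites link to it. For example: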

- Disallow: /private/ (Prevents crawling of the “/private/” directory)

- Disallow: /images/ (Prevents crawling of the “/images/” directory)

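- Allow: This directive makes an exception to a “Disallow” rule for specific pages or directories, so crawlers can fetch content that would otherwise be blocked. Major crawlers such as Googlebot apply the most specific (longest) matching rule, so the order of the two lines does not matter to them. For example: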

- Disallow: /private/

- Allow: /private/page.html (Allows crawling of the specific page “/private/page.html” within the disallowed directory)

- Sitemap: This directive specifies the location of the XML sitemap file, helping search engines discover and crawl your website more efficiently. For example:

- Sitemap: https://www.example.com/sitemap.xml
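To see how these directives fit together, here is a small Python sketch that parses a sample file with the standard library's `urllib.robotparser`. The file contents and the crawler name `ExampleBot` are assumptions for illustration. One caveat: Python's parser applies rules in file order (first match wins) rather than by longest match, so the `Allow` line is placed before the `Disallow` line to get the same result a longest-match crawler would produce.

```python
from urllib.robotparser import RobotFileParser

# Sample file built from the directives described above (illustrative only).
ROBOTS_TXT = """\
User-agent: *
Allow: /private/page.html
Disallow: /private/
Disallow: /images/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(path, agent="ExampleBot"):
    """Return True if the hypothetical crawler may fetch the given path."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


print(allowed("/private/secret.html"))  # False
print(allowed("/private/page.html"))    # True
print(allowed("/blog/post"))            # True
print(parser.site_maps())               # ['https://www.example.com/sitemap.xml']
```

`site_maps()` requires Python 3.8 or later.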

Controlling Search Engine Crawlers:

By using directives in the robots.txt file, website owners can control search engine crawlers’ behavior:

- Disallow specific directories or pages to keep search engines from crawling private or irrelevant content.

- Allow or disallow specific search engines or user-agents to define access permissions for different crawlers.
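As a rough illustration of per-crawler permissions, the sketch below defines separate groups for different user-agents and checks them with Python's `urllib.robotparser`. The group contents and the names `BadBot` and `OtherBot` are hypothetical. A crawler follows the group that names it and falls back to the `*` group only when no named group matches.

```python
from urllib.robotparser import RobotFileParser

# Three groups: one for Googlebot, one for a hypothetical BadBot, one default.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot follows only its own group, so /private/ is not blocked for it.
print(parser.can_fetch("Googlebot", "/drafts/a.html"))   # False
print(parser.can_fetch("Googlebot", "/private/x.html"))  # True

# BadBot is blocked everywhere.
print(parser.can_fetch("BadBot", "/"))                   # False

# Any other crawler falls back to the "*" group.
print(parser.can_fetch("OtherBot", "/private/x.html"))   # False
print(parser.can_fetch("OtherBot", "/drafts/a.html"))    # True
```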

Effective Use Cases for Robots.txt:

Robots.txt offers several use cases to optimize website crawling and indexing:

- Improve SEO performance by allowing search engines to focus on essential content and avoid crawling low-value pages.

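- Keep crawlers out of private areas such as admin or internal search pages. Keep in mind that robots.txt is publicly readable and compliance is voluntary, so it is not a security control; protect confidential data with authentication instead.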

- Handle duplicate content by blocking duplicate variants of a page (for example, printer-friendly or parameterized URLs) so that crawlers spend their time on the preferred version.

Best Practices for Robots.txt Implementation:

To make the most of robots.txt, consider the following best practices:

- Create a Robots.txt File: Start by creating a robots.txt file using a text editor and save it as “robots.txt” in the root directory of your website.

- Use a Disallow All Directive Only When Needed: A “User-agent: *” line followed by “Disallow: /” asks all crawlers to stay away from your entire website. This can be useful during development or on a staging site, but remember to remove it before launch, or your live site will not be crawled.

- Test and Validate: Utilize online tools or search engine-specific testing tools to validate your robots.txt file’s correctness and effectiveness. Ensure that it doesn’t inadvertently block important pages or directories.
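One way to automate the validation step is a small script that checks a list of important paths against the file. The following is a minimal sketch using Python's `urllib.robotparser`; the helper name `blocked_paths`, the crawler name `ExampleBot`, and the sample paths are assumptions, and the standard-library parser does not understand wildcards, so treat it as a first check rather than a replacement for a search engine's own testing tool.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, paths, agent="ExampleBot"):
    """Return the subset of paths that the given crawler may not fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(agent, path)]


# A "disallow all" file, as used on a development or staging site.
STAGING = "User-agent: *\nDisallow: /\n"

# A hypothetical production file that blocks only one directory.
LIVE = "User-agent: *\nDisallow: /private/\n"

important = ["/", "/products/", "/private/report.html"]

print(blocked_paths(STAGING, important))
# ['/', '/products/', '/private/report.html']
print(blocked_paths(LIVE, important))
# ['/private/report.html']
```

Running a check like this before each deployment can catch a staging file that was copied to production by mistake.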

Robots.txt and SEO Strategy:

Robots.txt is an integral part of an effective SEO strategy:

- Improved Rankings and Visibility: Properly managing crawler access helps search engines spend their crawl time on your most valuable content, which can support better rankings and visibility in search results.

- Alignment with Search Engine Guidelines: Following best practices and adhering to search engine guidelines through the use of robots.txt can enhance your website’s credibility and compliance with search engine standards.

Conclusion:

The robots.txt file is a powerful tool in website management, allowing you to communicate with search engine crawlers and control what they crawl on your site. By understanding the syntax, implementing best practices, and aligning the file with your SEO strategy, you can optimize your website’s visibility, keep crawlers out of areas that do not belong in search, and improve overall search engine performance.

Take advantage of robots.txt to establish a strong online presence while ensuring search engine crawlers navigate your site in the most efficient and effective manner.

Frequently Asked Questions (FAQs) about Robots.txt:

A robots.txt file is a text file placed in the root directory of a website that provides instructions to search engine crawlers. It tells search engines which parts of the site they are allowed to crawl.

When search engine crawlers visit a website, they first check the robots.txt file to understand which directories or pages they should or should not crawl. The directives in the robots.txt file guide the crawlers’ behavior.

Yes, you can use the “Disallow: /” directive to ask search engines not to crawl your entire website. However, this can remove your pages from search results over time, and URLs that other sites link to may still be indexed without their content, so use it with caution.

Yes, you can use the “Disallow” directive to prevent search engines from crawling specific pages or directories. This does not make them inaccessible: anyone with the URL can still open them, so pages that must stay private need authentication.

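When search engine crawlers encounter conflicting directives, they generally follow the most specific directive, meaning the rule with the longest matching path. For example, with “Disallow: /private/” and “Allow: /private/page.html”, the page “/private/page.html” can be crawled because the Allow rule is more specific. If an Allow rule and a Disallow rule are equally specific, Google and the robots.txt standard (RFC 9309) use the less restrictive Allow rule.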
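The longest-match rule used by Google and described in RFC 9309 can be sketched in a few lines of Python. This is a simplified illustration, not any crawler's actual implementation: the function name `is_allowed` and the rule format are assumptions, and wildcards are left out.

```python
def is_allowed(rules, path):
    """Apply longest-match precedence to a list of (directive, prefix) rules.

    rules: e.g. [("disallow", "/private/"), ("allow", "/private/page.html")]
    """
    best_len = -1
    best_allow = True  # no matching rule means the path may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        allow = directive.lower() == "allow"
        # A longer prefix is more specific; on a tie, Allow wins.
        if len(prefix) > best_len or (len(prefix) == best_len and allow):
            best_len = len(prefix)
            best_allow = allow
    return best_allow


rules = [("disallow", "/private/"), ("allow", "/private/page.html")]
print(is_allowed(rules, "/private/page.html"))   # True
print(is_allowed(rules, "/private/other.html"))  # False
print(is_allowed(rules, "/blog/"))               # True

# Equal-length conflict: the less restrictive Allow rule is used.
print(is_allowed([("disallow", "/page"), ("allow", "/page")], "/page"))  # True
```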

Yes, you can use the “*” wildcard character to match any sequence of characters in a URL. For example, “Disallow: /images/*.jpg” would disallow crawling of all JPEG images in the “/images/” directory.
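To make the wildcard behavior concrete, here is a minimal Python sketch that translates a robots.txt path pattern into a regular expression. It handles “*” (any sequence of characters) and a trailing “$” (end of URL), which Google and RFC 9309 both support. The helper names `pattern_to_regex` and `matches` are assumptions, and real crawlers may differ in details such as percent-encoding.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal text and turn each "*" into ".*".
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern, path):
    """Return True if the pattern matches the path from its start."""
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/images/*.jpg", "/images/photo.jpg"))          # True
print(matches("/images/*.jpg", "/images/photo.png"))          # False
print(matches("/images/*.jpg", "/images/photo.jpg?size=2"))   # True
print(matches("/images/*.jpg$", "/images/photo.jpg?size=2"))  # False
```

Without the trailing “$”, a pattern matches any URL that begins with it, which is why the query-string variant still matches in the third call.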

You can use online tools or search engine-specific testing tools to validate the correctness and effectiveness of your robots.txt file. These tools can help you identify any syntax errors or unintended blocks.

Including a sitemap directive in your robots.txt file is not mandatory, but it can help search engines discover and crawl your website more efficiently. Specify the location of your XML sitemap using the “Sitemap” directive.

Yes, you can edit and update your robots.txt file as needed. However, it’s important to ensure that any changes you make do not unintentionally block search engine crawlers from accessing important pages or directories.

Remember, the proper implementation and maintenance of your robots.txt file can have a significant impact on how search engines crawl and index your website. Always follow best practices and consider consulting SEO professionals for specific advice related to your website.